The Ultimate Review of GitHub Copilot for Language Translation

GitHub Copilot & OpenAI Codex

OpenAI Codex is a descendant of GPT-3, and its training data includes both natural language and billions of lines of source code from publicly available sources, such as GitHub repositories. OpenAI Codex excels in Python, but it also understands JavaScript, Go, Perl, PHP, Ruby, Swift and TypeScript, and even Shell. It has 14KB of memory for Python code, compared to GPT-3’s 4KB, so it can take into account more than three times as much contextual information while performing any task.

GPT-3’s main skill is generating natural language in response to a natural language prompt, which means it can only affect the world through the reader’s mind. OpenAI Codex has much of GPT-3’s natural language understanding, but it generates working code.

Because OpenAI Codex was trained on publicly available source code and natural language, it is applicable to both programming and human languages. The GitHub Copilot extension sends your comments and code to the GitHub Copilot service, and it relies on context, that is, file content in both the file you are editing and neighbouring or related files within a project. It may also collect repository URLs or file paths in order to identify the relevant context. OpenAI Codex then uses the comments and code, as well as the context, to synthesise and suggest individual lines and entire functions.

In this post we use an experimental Copilot feature called Language Translation. The translations are still “imperfect,” according to GitHub, so we test the tool across different programming languages and coding paradigms to see how it holds up.

Go vs Python vs JavaScript

Go, Python and JavaScript were used extensively to evaluate GitHub Copilot’s Language Translation.

Go is an open-source programming language created at Google. It is a compiled, statically typed language. It supports concurrent programming through goroutines, which are lightweight functions that run concurrently, and channels, which let goroutines communicate. Go has garbage collection, which handles memory management, and a defer statement that postpones a function call until the surrounding function returns.

Python is an object-oriented programming language with a high level of abstraction. It has built-in data structures as well as dynamic binding and typing, making it an excellent choice for rapid application development. Python also supports modules and packages, allowing for system modularity and code reuse.

JavaScript is a high-level language that conforms to the ECMAScript standard and is often just-in-time compiled. It supports dynamic typing, prototype-based object-oriented programming, and first-class functions. It is a multi-paradigm language that supports event-driven, functional, and imperative programming styles. We will be using Node.js to run JS from the terminal.

Research Questions

These are the research questions we set out to answer in our evaluation of GitHub Copilot.

Does Copilot perform well in Static to Dynamic conversion?

Go is statically typed, so it is much stricter than Python and JavaScript about how code must be written. We therefore expect translating code to Go to be tougher.

Is Copilot better at translating existing algorithms rather than novel code?

If a problem has a common, perhaps even universal, solution, then the translation should hypothetically perform better than it does on code the AI has not encountered before.

Can translated code pass all the test cases rather than only some?

Ideally, translated code should pass all the test cases that the original code was passing.
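One way to make this check concrete is to keep a single table of inputs and expected outputs and run it against each implementation. The sketch below does this in Python only, with two hypothetical functions standing in for an original program and its translation. In the actual evaluation the implementations live in different languages, so each would need its own test runner; the names `CASES`, `passed_cases` and `fully_passes` are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical shared test table: (input, expected output).
CASES: List[Tuple[str, str]] = [
    ("abc", "cba"),
    ("", ""),
    ("racecar", "racecar"),
]


def reverse_original(s: str) -> str:
    """Stand-in for the root-language implementation."""
    return s[::-1]


def reverse_translated(s: str) -> str:
    """Stand-in for a translation; written differently on purpose."""
    out = []
    for ch in s:
        out.insert(0, ch)
    return "".join(out)


def passed_cases(fn: Callable[[str], str]) -> List[bool]:
    """Run every shared case and record pass/fail per case."""
    return [fn(arg) == expected for arg, expected in CASES]


def fully_passes(fn: Callable[[str], str]) -> bool:
    """A translation counts as correct only if every case passes."""
    return all(passed_cases(fn))
```

Recording the result per case, rather than a single boolean, makes it possible to distinguish a translation that fails outright from one that passes only some of the cases.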

How often is the generated code syntactically incorrect?

We want to know whether the translated code is syntactically correct or not.
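For translations whose target is Python, syntactic correctness can be checked mechanically by asking the parser whether the generated source parses. The following is a minimal sketch using the standard library `ast` module; the helper name `is_valid_python` and the two sample strings are assumptions for illustration, and Go or JavaScript targets would need their own toolchain's parser instead.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Return True if `source` parses as Python, False on a syntax error."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


# A well-formed Python function.
good = "def add(a, b):\n    return a + b\n"

# A Go-style function left untranslated does not parse as Python.
bad = "func add(a int, b int) int {\n    return a + b\n}\n"
```

A check like this only says that the code parses. It says nothing about whether the translated program behaves like the original, which is what the test cases are for.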

How many attempts does it take to generate code in other languages?

Since Copilot’s Language Translation feature is still relatively new, it can take multiple attempts to generate the correct translation for a particular language.

If a library unique to a particular language is used in the program, will it work in a different language?

For example, if NumPy is used in Python, will the translated code use some other method/library to make the code work?
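To illustrate what "some other method" could mean, consider a single neuron computed as a weighted sum plus a bias. With NumPy this is commonly written as `np.dot(inputs, weights) + bias`; a target language without NumPy has to spell the same arithmetic out. The sketch below writes that library-free version in plain Python. It is a hypothetical example and not the code of any test case in this evaluation.

```python
from typing import Sequence


def neuron_output(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum plus bias, written without any numerical library."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = 0.0
    for x, w in zip(inputs, weights):
        total += x * w
    return total + bias


result = neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], 1.0)
```

A translation that reproduces this loop in the target language would preserve behaviour even though the original library call has no direct counterpart there.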

What does Copilot do when a feature that is present in only one language is translated?

Channels in Go and asynchronous event-based programming in JS are good examples of features exclusive to a particular language. We want to know how Copilot behaves when such features appear in the code.

Setup and Hypothesis

To test Go, Python and JS equally, we split the test cases three ways, so that programs in every language get to be the root language for translation. We also write test cases for all the programs so that we can verify that a test case passing in one language also passes in the others. If a unit test could not be written for a particular problem, the reason is given in the Appendix. An explanation of each coding problem is available in **explanation.txt**, which is present in every problem folder. You can find the GitHub repo with the code here: https://github.com/nandangrover/copilotlabs-evaluation
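The translation directions implied by this setup can be enumerated in a few lines. This is only a sketch of the bookkeeping, with `LANGUAGES`, `PAIRS` and `targets_for` as illustrative names; it is not taken from the repository.

```python
from itertools import permutations

LANGUAGES = ["Go", "Python", "JavaScript"]

# Every ordered (root, target) pair: each language is translated to the other two.
PAIRS = list(permutations(LANGUAGES, 2))


def targets_for(root: str) -> list:
    """Languages that a program written in `root` gets translated into."""
    return [target for source, target in PAIRS if source == root]
```

With three languages there are six directed pairs, so every program written in a root language yields two translations to evaluate.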

Easy Test Cases

For easy test cases, we use basic programs, such as hello world and pattern creation, to test whether Copilot can translate them to other languages. Since this is a simple task, we expect Copilot to translate the programs correctly within the first few attempts.

Hard Test Cases

We use algorithmic challenges to test Language Translation. These challenges rely on existing algorithmic techniques to reach an output. While we still predict that the majority of the test cases will pass, we believe there may be some syntactical issues for very complex pieces of code.

Challenging Test Cases

Challenging test cases consist of novel code that is not available in open-source repositories. They also contain code features specific to a particular language, such as channels in Go and asynchronous code blocks in JavaScript. Our hypothesis is that Copilot Language Translation will have problems translating these into syntactically correct, working code, since finding a working equivalent of those features in other languages is a challenge even for human programmers.
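To give a sense of what a "working equivalent" can look like, the sketch below approximates a Go channel fed by a goroutine using Python's standard `queue` and `threading` modules. It is a hand-written illustration under our own assumptions, not Copilot output: the sentinel `_DONE` stands in for closing the channel, and the function names are hypothetical.

```python
import queue
import threading

_DONE = object()  # sentinel playing the role of close(ch) in Go


def producer(ch: queue.Queue, n: int) -> None:
    """Send the squares of 0..n-1 on the queue, then signal completion."""
    for i in range(n):
        ch.put(i * i)
    ch.put(_DONE)


def collect_squares(n: int) -> list:
    """Start a producer thread and receive from it until the sentinel arrives."""
    ch = queue.Queue(maxsize=1)  # small buffer, roughly like a buffered channel
    worker = threading.Thread(target=producer, args=(ch, n))
    worker.start()
    results = []
    while True:
        item = ch.get()  # blocks until the producer has sent something
        if item is _DONE:
            break
        results.append(item)
    worker.join()
    return results
```

Even in this small case the translation is not one-to-one: there is no built-in notion of closing a queue, so the receiver needs an explicit sentinel, and the thread must be joined by hand.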

Testable Explanation

Does Copilot perform well in Static to Dynamic conversion?

We can see from the test cases that translating from Go to other languages took a total of **78** attempts, while translating from Python to other languages took 28 attempts and from JavaScript to other languages took 33 attempts. Even accounting for the error margin, we can reasonably say that Copilot does not do well when translating code from Go to Python and JavaScript.
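The size of the gap is easier to see as ratios. The short calculation below uses only the three totals reported above; the variable names are ours.

```python
# Total attempts per root language, as reported in the text.
attempts = {"Go": 78, "Python": 28, "JavaScript": 33}

go_vs_python = attempts["Go"] / attempts["Python"]
go_vs_javascript = attempts["Go"] / attempts["JavaScript"]
go_share = attempts["Go"] / sum(attempts.values())

print(f"Go vs Python: {go_vs_python:.2f}x")          # 2.79x
print(f"Go vs JavaScript: {go_vs_javascript:.2f}x")  # 2.36x
print(f"Go share of all attempts: {go_share:.0%}")   # 56%
```

Programs rooted in Go therefore needed more than twice as many attempts as programs rooted in either of the other two languages.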

Is Copilot better at translating existing algorithms rather than novel code?

The hard test cases mostly used existing algorithms wrapped in some layers of complexity. As the Appendix shows, all test cases passed apart from 6 failures, so Copilot does perform well when translating existing algorithms.

We can also see that Copilot failed 3 times on string-based algorithmic programs among the hard problems. All of the translation failures occurred when translating from Python and JS to Go. Below is the list of test cases it failed against.

- Multi String Search
- Underscorify Substring
- Underscorify Substring

While certainly not conclusive, this might imply that Copilot Language Translation does not perform well on string-based programs when translating to Go.

Can translated code pass all the test cases rather than only some?

As we can see in the Appendix, when the code was translated, it usually passed all the test cases. In only 7 of 100 attempted translations did some of the test cases fail for the translated code. These partial failures were concentrated in the complex test cases: 6 of the 7 instances involved programs that were part of a complex test case set.

How often is the generated code syntactically incorrect?

For the easy test cases, the code was always syntactically correct. For hard problems, we saw 5 instances of incorrect syntax out of a total of over 40 translations. For challenging problems, we saw 20 instances of incorrect syntax out of a total of over 40 translations. It was also observed that upon failures, the generated code would sometimes be the same as the root language (Test case: Async Call).

How many attempts does it take to generate code in other languages?

The number of attempts varied from language to language, but on average any code translated to or from Go took more attempts than code in any other language.

If a library unique to a particular language is used in the program, will it work in a different language?

We observed that simple libraries, such as random or Math, translated well to other languages, but libraries like Pandas did not. While some test cases failed because of libraries like Pandas (Test Case: Pandas) or json-server (Test Case: JSON Server), others passed despite using NumPy (Test Case: Neuron). Therefore, the performance of translation involving a language-specific library cannot be reported with much confidence.

Appendix

In the appendix below, all the easy, hard and challenging test cases are described. The codebase for the appendix can be found here:

Conclusion

GitHub Copilot’s Language Translation feature was tested thoroughly using easy, hard and challenging test cases. In the process, we answered some of the research questions posed at the start of this post. For future work, we would like to collect more data in order to use machine learning models to predict whether a program in a particular domain (such as arrays or strings) and of a particular size (lines of code) has a higher probability of translating successfully. We would also like to test the system on more programming languages.

Related Posts

Building a CI Pipeline using Github Actions for Sharetribe and RoR

Continuous Integration (CI) is a crucial part of modern software development workflows. It helps ensure that changes to the codebase are regularly integrated and tested, reducing the risk of introducing bugs and maintaining a high level of code quality.

Read more

Exploring if Large Language Models possess consciousness

As technology continues to advance, the development of large language models has become a topic of great interest and debate. These models, such as OpenAI’s GPT-4, are capable of generating coherent and contextually relevant text that often mimics human-like language patterns.

Read more

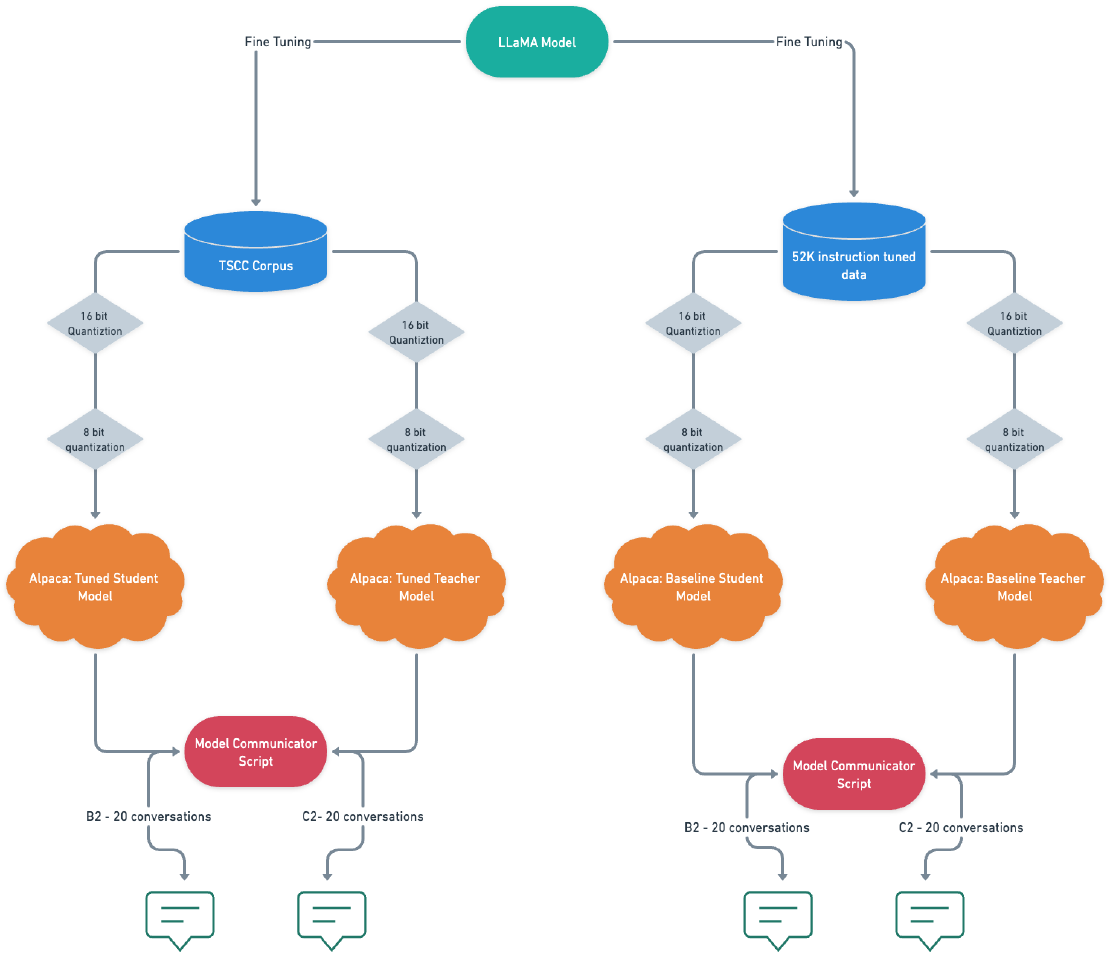

Fine-Tuning Alpaca: Enabling Communication between LLMs on my M1 Macbook Pro

In this blog post, I will share a piece of the work I created for my thesis, which focused on analyzing student-tutor dialogues and developing a text generation system that enabled communication between a student chatbot and a tutor chatbot.

Read more